Content quality over content source

Either a work is inspiring or insightful, or it’s not. Stop qualifying the work by saying it was created by an LLM or another form of generative AI.

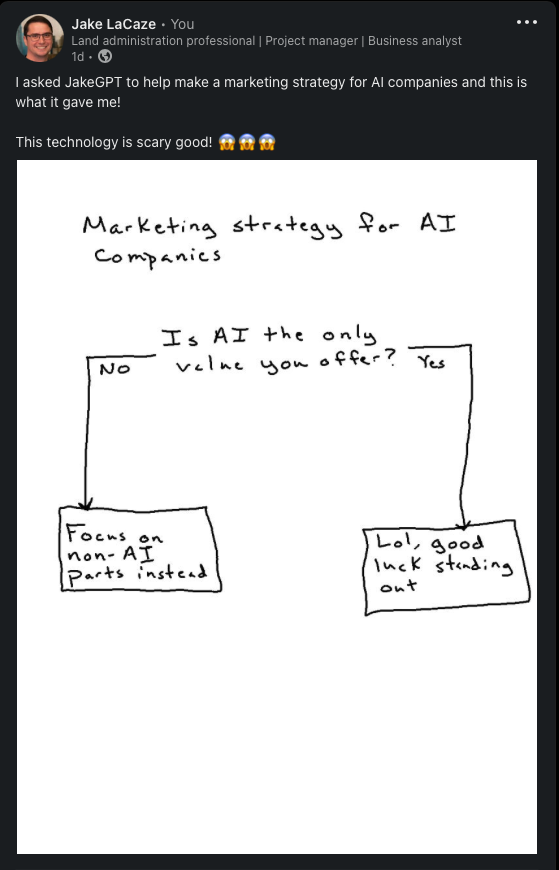

I recently made a tongue-in-cheek post on LinkedIn as a jab at people who give large language models (LLMs) too much credit simply because they’re machines.

This silly post got me thinking about content quality vs. content source.

If you disagree with the point of my post, that’s fine. You’re free to criticize it, poke holes in it, and tear it apart. I ask only that you do the same if the post had been created by an LLM like ChatGPT. Please don’t be one of those people who would find the post insightful if it had been written by a machine trained for countless hours on terabytes and terabytes of data. In this situation, the result is far more important than the process.

LLMs and other generative AI must be held to higher standards. We must stop pretending these models are smart just because they use so much data. Data alone is useless without critical thinking and insight. If the models and their algorithms are flawed, there’s only so much the models can do with more data.

My own model, JakeGPT, is trained on nearly 40 years of experience as a real-world human being, including a marketing degree and 15 months in tech marketing. JakeGPT may not have been trained on the largest dataset, but at some point, data is no longer the limiting factor, so more data is not the answer.

Until AI can replace humans everywhere, it will be necessary to relate to humans to influence them. Data and facts and figures, the strengths of AI, can go only so far. Humans still respond to story, and personal stories are more effective than the generalizations that LLMs churn out.

Personal story and insights are the strengths of JakeGPT. Sure, the model is flawed and unintentionally biased in its own ways. But so are models like ChatGPT. And JakeGPT needs less data, less training, and less electricity. And perhaps best of all, JakeGPT is less likely to empower bad actors looking to deceive or harm others. (But if JakeGPT does ever go rogue, it can’t be used for nefarious purposes at the same scale as other models.)

And the cherry on top: JakeGPT plays for Team Human.¹